Vision Systems for Flight Guidance

This research program seeks to develop and demonstrate a completely passive, independent vision-based navigation system for use in aerial environments. The objective is for the system to be independent of external infrastructure (e.g. GPS) and to be passive, minimising the risk of detection in hostile environments. The system would be applicable to autonomous vehicles and could also aid navigation in manned systems.

Description

Significance

There has been much recent focus in robotics on developing robust navigation systems that work in GPS-denied and/or unstructured environments. This proposal does not seek to solve the generic problem of navigation in an unstructured environment. Rather, it aims to provide a reliable solution for the common, well-ordered aerial situation in which visual navigation information is rich, while remaining independent of external navigation infrastructure for robust and low-observable operations. Such a system is realisable, and promises a great leap forward in aerial navigation for manned and unmanned systems.

Strategy

The basic principle is to use vision cameras to provide localisation of the vehicle by visual detection and tracking of natural and man-made ground features en route. The system will combine the best features of SLAM (Simultaneous Localisation and Mapping) for short-term navigation precision with TAN (Terrain-Aided Navigation, defined here in terms of a knowledge base of identifiable ground-based features rather than terrain matching) where appropriate, as well as visual attitude determination. A critical element in precision feature-based navigation is attitude precision, because any attitude uncertainty gives rise to altitude-dependent position estimation errors. While most systems rely solely on integration of rotation rate measurements from an IMU (which is subject to drift), this system inherently incorporates a visual horizon detection system for precision attitude measurement.
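
The altitude dependence of this error can be illustrated with a short calculation. This is a minimal sketch, not the project's error model: it assumes flat ground and a camera line of sight that points straight down apart from the attitude error, and the function name `ground_position_error` is hypothetical.

```python
import math


def ground_position_error(altitude_m: float, attitude_error_deg: float) -> float:
    """Horizontal ground error (m) caused by an attitude error.

    Assumes flat ground and a nominally nadir-pointing line of sight,
    so the error is altitude * tan(attitude error).
    """
    return altitude_m * math.tan(math.radians(attitude_error_deg))


# The same 1 degree attitude error grows in proportion to altitude.
for altitude in (100.0, 500.0, 1000.0):
    error = ground_position_error(altitude, 1.0)
    print(f"altitude {altitude:6.0f} m -> ground error {error:5.1f} m")
```

Under these assumptions, a 1 degree attitude error corresponds to roughly 17 m of ground error at 1000 m altitude, which is why a drift-free attitude reference such as the horizon matters for feature-based position fixes.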

The strategy is, on the basis of a good attitude fusion result, to integrate all sources of visual navigation information to produce a robust navigation solution. The first step is to use SLAM, via temporary tracking of less significant features in the environment, to limit the normal quadratic dead-reckoning drift from an IMU. These features are objects or regions that have definite and repeatably trackable visual characteristics, but which are not significant enough to log in a database. When more definite features are detected, such as roads, road junctions and water bodies that are repeatably detectable and have definite location characteristics, they are integrated into the SLAM database if they are not already logged. If the features are significant and already exist in a database of known navigation features, then their known position (and precision) is integrated into the navigation fusion algorithm to dramatically improve the precision of the navigation solution (this is feature-matched terrain aiding). An additional source of localisation information is obtained by matching the shape of the horizon profile to terrain database information when a sufficient horizon profile is detectable. This pragmatic approach to integration will give rise to a realisable visual navigation system that, for all intents and purposes, mimics human pilot navigation performance under VFR (Visual Flight Rules).
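
The effect of fusing a known feature position into a drifting dead-reckoning estimate can be sketched in one dimension. The source does not specify the fusion algorithm, so this example assumes a scalar Kalman-style measurement update and models drift as the result of a constant accelerometer bias. The function names and all numbers are illustrative assumptions.

```python
def dead_reckoning_drift(accel_bias: float, t: float) -> float:
    """Position error (m) after t seconds of integrating a constant
    accelerometer bias (m/s^2): the error grows quadratically with time."""
    return 0.5 * accel_bias * t ** 2


def fuse(estimate: float, variance: float, fix: float, fix_variance: float):
    """Scalar Kalman-style measurement update (assumed, not the project's filter).

    Blends the dead-reckoned position with a position fix derived from a
    database feature, weighting each by its variance.
    """
    gain = variance / (variance + fix_variance)
    new_estimate = estimate + gain * (fix - estimate)
    new_variance = (1.0 - gain) * variance
    return new_estimate, new_variance


# Illustrative numbers: 0.01 m/s^2 bias integrated for 60 s.
drift = dead_reckoning_drift(0.01, 60.0)
true_position = 100.0
drifted = true_position + drift

# A known feature gives a position fix with a 5 m standard deviation,
# while the dead-reckoned estimate is assumed to have a 20 m standard deviation.
position, variance = fuse(drifted, 20.0 ** 2, true_position, 5.0 ** 2)
print(f"drift {drift:.1f} m, fused position {position:.1f} m, variance {variance:.1f} m^2")
```

The fused variance is always smaller than either input variance, which is the sense in which a feature with a known position and precision tightens the navigation solution.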

Terrain-Aided Navigation using Human-Recognisable Features

In recent years, there has been a large focus on developing vision-aided systems to provide aiding measurements for navigation systems. Vision-based methods have the advantages of being cheap, passive, and not subject to the same limitations as GPS. The use of optical sensors can increase the autonomy and reliability of navigation systems. Existing systems use a variety of reference navigation features, including SIFT (Scale-Invariant Feature Transform) or SURF (Speeded-Up Robust Features) key points, blob features, image template matching, and the detection of artificial markers placed in the environment. Most of the visual features used are either artificial (placed in the environment to facilitate the experiment) or computer-recognisable features such as SIFT, SURF, blob or image template features (all of which are sometimes referred to as natural features). It is advantageous to investigate human-recognisable features (such as rivers, roads, and buildings), as these are the features that pilots successfully use to navigate in VFR (Visual Flight Rules) conditions. The use of higher-level features can increase the robustness of the navigation process by providing additional information that can be used in the data association stage.

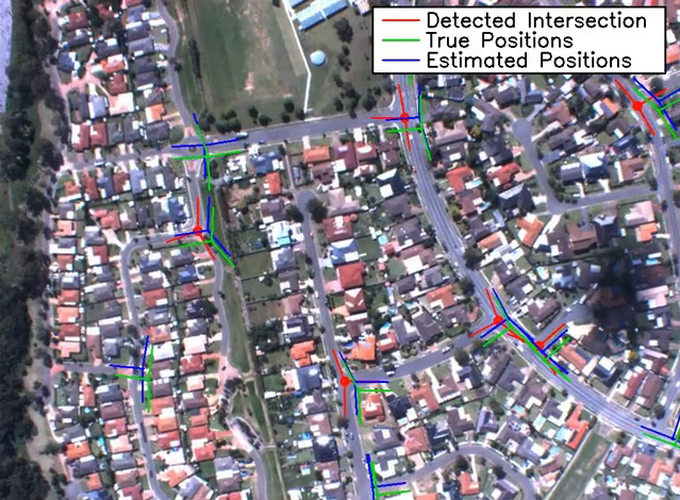

Higher-level features such as road intersections or junctions have been selected for investigation as navigation features in this research project. Road intersections are very common, stable, and obvious landmarks in urban areas and are readily mapped in GIS databases. The road branches of an intersection provide very useful data association information, and the centre of the intersection provides a discrete point feature.
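
One way the branch information could support data association is sketched below. This is an illustration under stated assumptions, not the project's algorithm: the `Intersection` record, the `DATABASE` entries, the 15 degree threshold and the nearest-branch comparison are all hypothetical, and the detected branch bearings are assumed to have already been rotated into a north-referenced frame.

```python
from dataclasses import dataclass


@dataclass
class Intersection:
    """Hypothetical database record for a mapped road intersection."""
    name: str
    east_m: float
    north_m: float
    branch_bearings_deg: tuple  # bearing of each road branch, degrees from north


def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two bearings, in degrees."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def branch_mismatch(detected, candidate) -> float:
    """Worst-case angular gap between each detected branch and its nearest
    candidate branch; infinite when the branch counts differ."""
    if len(detected) != len(candidate):
        return float("inf")
    return max(min(angle_diff(d, c) for c in candidate) for d in detected)


def associate(detected, database, max_mismatch_deg: float = 15.0):
    """Return the best-matching database intersection, or None if no entry
    matches within the (assumed) mismatch threshold."""
    best = min(
        database,
        key=lambda entry: branch_mismatch(detected, entry.branch_bearings_deg),
        default=None,
    )
    if best is None or branch_mismatch(detected, best.branch_bearings_deg) > max_mismatch_deg:
        return None
    return best


# Illustrative entries only; positions and shapes are made up.
DATABASE = [
    Intersection("Crossroads", 0.0, 0.0, (0.0, 90.0, 180.0, 270.0)),
    Intersection("T-junction", 400.0, 150.0, (90.0, 180.0, 270.0)),
    Intersection("Y-junction", -250.0, 600.0, (0.0, 120.0, 240.0)),
]

match = associate((92.0, 178.0, 271.0), DATABASE)
print(match.name if match else "no match")
```

A practical system would also restrict the candidates to intersections near the predicted vehicle position. The branch geometry then helps to separate candidates that position alone cannot distinguish, and the matched record supplies the intersection centre as the point feature.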

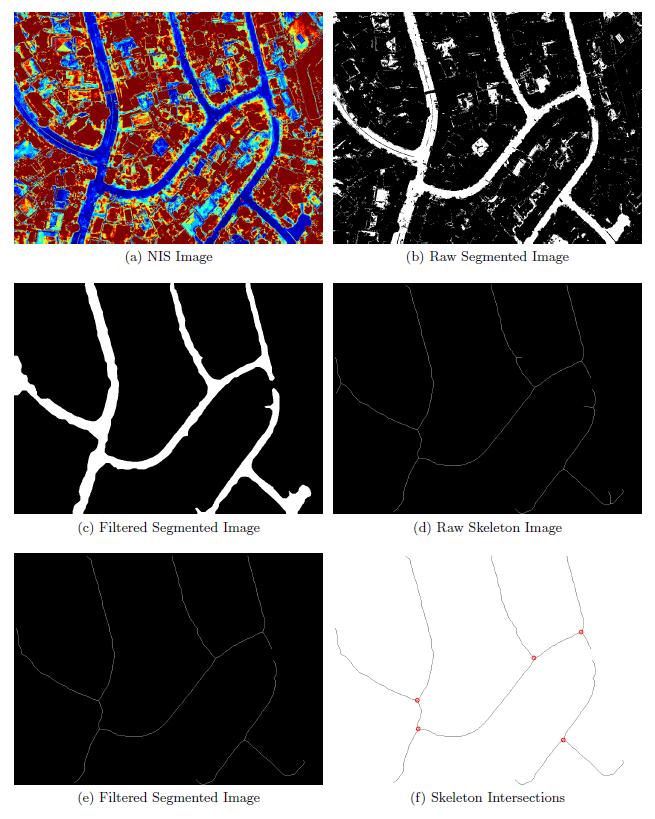

A road intersection detection algorithm was developed and flight tests of the visual fusion process were undertaken to prove the usefulness of this visual navigation method. The following images show an example of the road intersection image processing method used to extract the intersections.

Video

A video is available of the road intersection navigation system in operation from a test flight conducted with the Jabiru test platform.